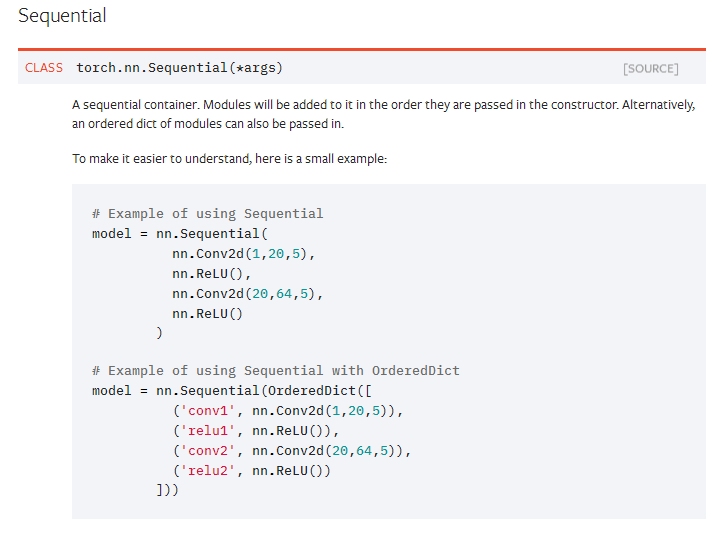

The NN does not seem to capture the non-linearity of $\cos(x)$ at the right end of the interval. The reason is an asymmetry I found in this NN's approximation of $\cos(x)$ over the interval: when preparing the training set we did not use the period $T=2\pi$ of $\cos(x)$; we used $3\pi$ instead.

To validate the model, we use the last 30% of the randomly shuffled indices. The validation error is computed with `torch::mse_loss(validation_values, y_validation)`, and `y_model_sequence` is saved with `torch::save(y_model_sequence, "y_model_sequence.pt")`. On the training side, the loss is `loss_values = torch::mse_loss(training_prediction, y_training)`. The error with respect to `y_training` is reported through `double max_loss = loss_values.max().item<double>()`, which is printed as `<< ", max(loss_values) = " << max_loss << endl`. The convergence data goes to a file opened with `ofstream conv_file("convergence_data.csv")`.

In Python, the model definition starts with `import torch.nn as nn` followed by `model = nn.Sequential(nn.…`. Validate the model with the validation set: since the torch model has layers that PyTorch doesn't support (such as inception…

`torch.Tensor` is the central class of PyTorch. When you create a tensor, if you set its attribute `requires_grad` as `True`, the package tracks all operations on it. The gradients are calculated when `backward()` is called, and the gradient for this tensor is accumulated (summed) into its `.grad` attribute. The same accumulation happens on subsequent backward passes. Because PyTorch accumulates the weight gradients of the network across backward propagations, `optimizer.zero_grad()` is called to zero the gradients, so that previous passes do not influence the direction of the gradient.

In MXNet Gluon, after `from mxnet import nd` and `from mxnet.gluon import nn`, layers are added to a network with calls such as `net.add(nn.Dense(256, activation='relu'))`. This generates a network with a hidden layer of $256$ units, followed by a ReLU activation and another $10$ units governing the output. In particular, we used the `nn.Sequential` constructor to generate an empty network into which we then inserted both layers. What exactly happens inside `nn.Sequential` has remained rather mysterious so far. In the following we will see that this really just constructs a block. These blocks can be combined into larger artifacts, often recursively.

In the following we will explain the various steps needed to go from defining layers to defining blocks (of one or more layers):

- Blocks store state in the form of parameters that are inherent to the block. For instance, the block above contains two hidden layers, and we need a place to store parameters for it.
- Blocks produce meaningful output. This is typically encoded in what we will call the `forward` function. It allows us to invoke a block via `net(X)` to obtain the desired output. What happens behind the scenes is that it invokes `forward` to perform forward propagation (also called forward computation).
- Blocks initialize the parameters in a lazy fashion as part of the first `forward` call.
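The three properties of a block listed above (stored parameters, a `forward` function invoked through `net(X)`, and lazy initialization on the first forward call) can be illustrated without any framework. The following is a minimal plain-Python sketch, not MXNet's implementation; the class names `TinyDense` and `TinySequential`, the fixed seed, and the input size of 20 are hypothetical choices made only for illustration.

```python
import random


class TinyDense:
    """Hypothetical dense layer y = W x + b; parameters are created lazily."""

    def __init__(self, units, activation=None):
        self.units = units
        self.activation = activation
        self.weight = None  # state of the block, filled in on first forward call
        self.bias = None

    def _lazy_init(self, in_features):
        # The input size is only known once data arrives, hence the lazy init.
        rng = random.Random(0)  # fixed seed so the sketch is reproducible
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_features)]
                       for _ in range(self.units)]
        self.bias = [0.0] * self.units

    def forward(self, x):
        if self.weight is None:
            self._lazy_init(len(x))
        out = [sum(w * v for w, v in zip(row, x)) + b
               for row, b in zip(self.weight, self.bias)]
        if self.activation == "relu":
            out = [max(0.0, v) for v in out]
        return out

    def __call__(self, x):
        # Calling the block as layer(x) simply invokes forward.
        return self.forward(x)


class TinySequential:
    """Hypothetical container block: applies its child blocks in order."""

    def __init__(self):
        self.layers = []

    def add(self, layer):
        self.layers.append(layer)

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

    def __call__(self, x):
        return self.forward(x)


net = TinySequential()
net.add(TinyDense(256, activation="relu"))  # hidden layer, 256 units, ReLU
net.add(TinyDense(10))                      # output layer, 10 units

x = [0.5] * 20                              # hypothetical input with 20 features
params_before = net.layers[0].weight        # None: nothing allocated yet
y = net(x)                                  # first forward call creates parameters
print(len(y))                               # prints 10
```

Since `TinySequential` exposes the same `forward` and `__call__` interface as `TinyDense`, one container can be added to another, which is the recursive composition the text refers to.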
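The validation split mentioned earlier, where the last 30% of the randomly shuffled indices are held out, can be sketched in plain Python as follows. The sample count `N`, the seed, and the sampling interval $[0, 3\pi]$ are assumptions made for illustration only; the post states that $3\pi$ was used in place of $2\pi$ but does not give the interval bounds. The helper `mse` is a hypothetical stand-in that mirrors what a mean-squared-error loss computes.

```python
import math
import random

N = 100                      # hypothetical number of samples
X_MAX = 3 * math.pi          # assumed upper bound of the sampling interval

# Evenly spaced samples of cos(x) on the assumed interval [0, 3*pi].
xs = [X_MAX * i / (N - 1) for i in range(N)]
ys = [math.cos(x) for x in xs]

# Shuffle the indices once, then split: first 70% train, last 30% validate.
indices = list(range(N))
random.Random(42).shuffle(indices)   # fixed seed for reproducibility
split = N * 7 // 10                  # integer arithmetic avoids rounding issues
train_idx = indices[:split]
val_idx = indices[split:]

x_training = [xs[i] for i in train_idx]
y_training = [ys[i] for i in train_idx]
x_validation = [xs[i] for i in val_idx]
y_validation = [ys[i] for i in val_idx]


def mse(prediction, target):
    """Mean squared error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)


# Trivial baseline (predict the training mean) just to exercise the split.
baseline = sum(y_training) / len(y_training)
validation_error = mse([baseline] * len(y_validation), y_validation)

print(len(train_idx), len(val_idx))  # prints: 70 30
```

Shuffling before splitting keeps both subsets spread over the whole interval, so a poor fit at one end of the interval shows up in the validation error rather than being hidden by a contiguous hold-out.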
